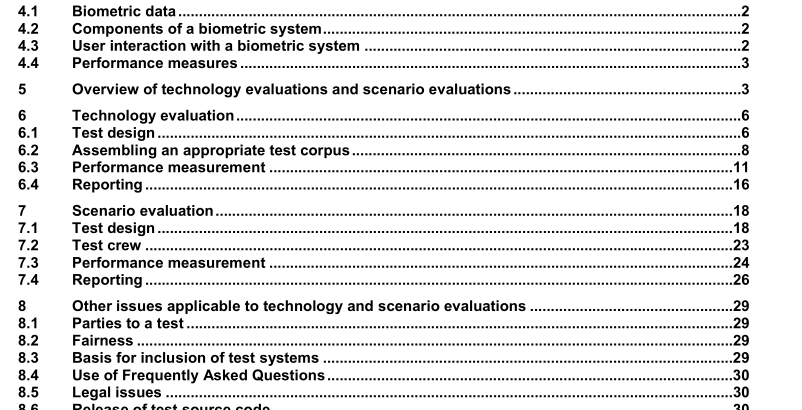

AS ISO IEC 19795.2:2010 – Information technology – Biometric performance testing and reporting – Part 2: Testing methodologies for technology and scenario evaluation

5 Overview of technology evaluations and scenario evaluations

This standard addresses two types of evaluation methodologies: technology evaluations and scenario evaluations. A test report shall state whether it presents results from a technology evaluation, a scenario evaluation, or an evaluation that combines aspects of both technology and scenario evaluations.

Technology evaluation is the offline evaluation of one or more algorithms for the same biometric modality using a pre-existing or specially-collected corpus of samples. The utility of technology testing stems from its separation of the human-sensor acquisition interaction and the recognition process, whose benefits include the following:

⎯ Ability to conduct full cross-comparison tests. Technology evaluation affords the possibility to use the entire testing population as claimants to the identities of all other members (i.e. impostors), and this allows estimates of false match rates to be made on the order of one in N², rather than one in N.

⎯ Ability to conduct exploratory testing. Technology evaluation can be run with no real-time output demands, and is thus well-suited to research and development. For example, the effects of algorithmic improvements, changes in run time parameters such as effort levels and configurations, or different image databases, can be measured in, essentially, a closed-loop improvement cycle.

⎯ Ability to conduct multi-instance and multi-algorithmic testing. By using common test procedures, interfaces, and metrics, technology evaluation affords the possibility to conduct repeatable evaluations of multi-instance systems (e.g. three views of a face) and multi-algorithmic (e.g. supplier A and supplier B) performance, or any combination thereof.

⎯ Provided the corpus contains appropriate sample data, technology testing is potentially capable of testing all modules subsequent to the human-sensor interface, including quality control and feedback module(s), signal processing module(s), image fusion module(s) (for multi-modal or multi-instance biometrics), feature extraction and normalization module(s), feature-level fusion module(s), comparison score computation and fusion module(s), and score normalization module(s).

⎯ The nondeterministic aspects of the human-sensor interaction preclude true repeatability and this complicates comparative product testing. Elimination of this interaction as a factor in performance measurement allows for repeatable testing. This offline process can be repeated ad infinitum with little marginal cost.

⎯ If sample data is available, performance can be measured over very large target populations, utilizing samples acquired over a period of years.
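The full cross-comparison described in the first benefit above can be illustrated with a minimal Python sketch. It assumes one stored sample per subject and a similarity-style comparator; the names `cross_comparison_false_matches`, `toy_compare`, the sample values and the threshold are all hypothetical and are not taken from the standard. With N subjects, every subject is compared against every other subject's sample, giving N(N − 1) impostor comparisons, which is why the smallest observable non-zero false match rate is roughly one in N².

```python
from itertools import permutations


def cross_comparison_false_matches(samples, compare, threshold):
    """Count false matches over all ordered impostor pairs.

    samples   -- dict mapping subject id -> one stored sample (assumption:
                 one sample per subject, for simplicity)
    compare   -- hypothetical comparator returning a similarity score
    threshold -- decision threshold; score >= threshold counts as a match
    Returns (false_matches, impostor_comparisons).
    """
    impostor_scores = [
        compare(samples[probe_id], samples[reference_id])
        # permutations(..., 2) yields every ordered pair of distinct subjects
        for probe_id, reference_id in permutations(samples, 2)
    ]
    false_matches = sum(score >= threshold for score in impostor_scores)
    return false_matches, len(impostor_scores)


def toy_compare(probe, reference):
    """Illustrative stand-in for a real comparison algorithm."""
    return 1.0 - abs(probe - reference)


# Hypothetical corpus: four subjects, each sample reduced to a single number.
corpus = {"s1": 0.10, "s2": 0.12, "s3": 0.50, "s4": 0.90}

false_matches, comparisons = cross_comparison_false_matches(
    corpus, toy_compare, threshold=0.95
)
fmr_estimate = false_matches / comparisons
print(f"{false_matches} false matches in {comparisons} impostor comparisons")
```

Because the same subjects are reused across many pairs, the impostor comparisons in such a sketch are not statistically independent, so the resulting estimate should be interpreted with care.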

NOTE 1 Collecting a database of samples for offline enrolment and calculation of comparison scores allows greater control over which samples and attempts are to be used in any transaction.

NOTE 2 Technology evaluation will always involve data storage for later, offline processing. However, with scenario evaluations, online transactions might be simpler for the tester: the system is operating in its usual manner and storage of samples, although recommended, is not absolutely necessary.

Scenario evaluation is the online evaluation of end-to-end system performance in a prototype or simulated application. The utility of scenario testing stems from the inclusion of human-sensor acquisition interaction in conjunction with the enrolment and recognition processes, whose benefits include the following:

⎯ Ability to gauge the impact of additional attempts and transactions on the system’s ability to enrol and recognize Test Subjects.

⎯ Ability to collect throughput results for enrolment and recognition trials inclusive of presentation and sample capture duration.
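As a rough illustration of the throughput benefit above, the following Python sketch derives transaction durations from timestamps that span the whole transaction, from the start of presentation to the system decision. The excerpt does not define how throughput is to be calculated, so the formula used here (subjects per minute, from the mean transaction duration), the function names and the timing values are assumptions made for illustration only.

```python
from statistics import mean


def transaction_durations(transactions):
    """Return the duration in seconds of each transaction.

    transactions -- list of (presentation_start_s, decision_s) tuples, so each
                    duration includes presentation and sample capture time.
    """
    return [decision - start for start, decision in transactions]


def throughput_per_minute(transactions):
    """Assumed throughput measure: transactions per minute at the mean duration."""
    return 60.0 / mean(transaction_durations(transactions))


# Hypothetical timing log for three recognition transactions (seconds).
recognition_log = [(0.0, 6.0), (10.0, 14.0), (20.0, 25.0)]

durations = transaction_durations(recognition_log)
rate = throughput_per_minute(recognition_log)
print(f"mean duration {mean(durations):.1f} s, about {rate:.1f} transactions per minute")
```

Timing only the comparison step would leave out the human-sensor interaction, which is the part of the transaction that a scenario evaluation is able to observe.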

NOTE 3 In online evaluations, the Experimenter may decide not to retain biometric samples, reducing storage requirements and in certain cases ensuring fidelity to real-world system operations. However, retention of samples in online tests is recommended for auditing and to enable subsequent offline analysis.

NOTE 4 Testing a biometric system will involve the collection of input images or signals, which are used for biometric reference generation at enrolment and for calculation of comparison scores at later attempts. The images/signals collected can either be used immediately for an online enrolment, verification or identification attempt, or may be stored and used later for offline enrolment, verification or identification.